As artificial intelligence (AI) becomes increasingly central to the operations of America’s largest corporations, recent research reveals potential security vulnerabilities that could affect organisations and their customers.

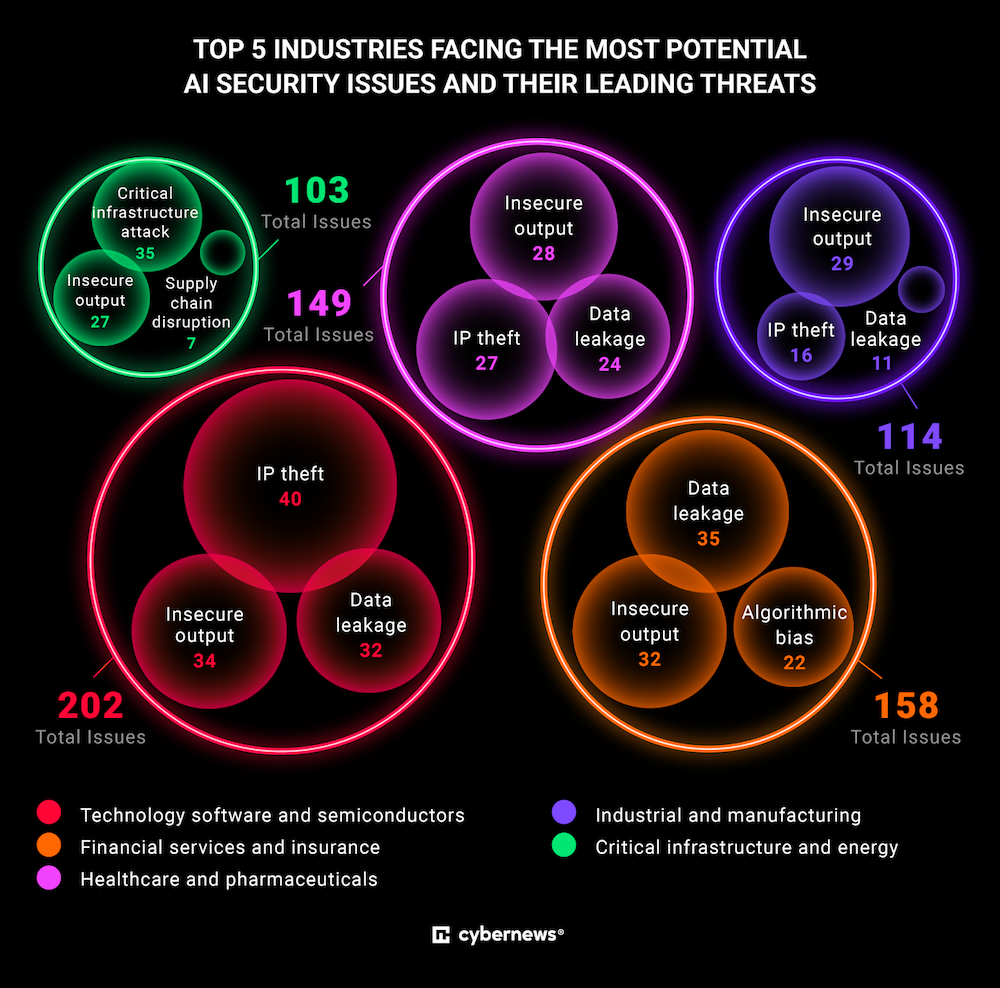

An analysis by cybersecurity experts at Cybernews examined AI deployments across S&P 500 companies and uncovered close to 1,000 potential weak points that could lead to data exposure, theft of proprietary information, or erroneous AI actions.

The study found that 327 S&P 500 companies publicly report using AI tools in their operations, across sectors including finance, healthcare, manufacturing, and energy.

While these tools have accelerated innovation and efficiency, safety measures have yet to fully catch up, leaving systems open to misuse or failure. The risks include inaccurate or misleading AI outputs, unintended disclosure of confidential data, and compromised corporate secrets.

Žilvinas Girėnas, head of product at nexos.ai, emphasised, “It’s not enough to deploy AI and hope for the best. Businesses need to develop AI with the same safety standards as airplanes: constant oversight, clear guardrails, and a zero-trust approach. Every AI decision must be considered potentially wrong until proven correct, and every input must be monitored to prevent sensitive data from leaking or trade secrets from escaping.”

The potential vulnerabilities extend across multiple industries. Technology and semiconductor companies are especially vulnerable to data leaks and intellectual property (IP) risks. Financial institutions might face challenges protecting client data while ensuring AI does not reinforce unfair bias in lending.

Healthcare providers carry the added responsibility of protecting patients from flawed AI-driven recommendations. Meanwhile, industrial and infrastructure sectors must guard against disruptions that could affect critical services, such as power supply or supply chain operations.

For consumers, the consequences are tangible. Unsecured AI systems risk leaking private details ranging from medical histories to financial records, while flawed AI judgments could influence decisions that directly affect people’s health and finances.

As AI tools play a larger role in retail, banking, transportation, and other areas, securing these technologies becomes essential to public safety.

The report highlights past incidents that illustrate these dangers. IBM’s Watson once offered unsafe cancer treatment suggestions. Apple’s credit system faced scrutiny after allegations of gender bias. Zillow’s AI-driven pricing led to substantial financial losses. Additionally, Samsung experienced unintended source code disclosures due to inappropriate use of AI chatbots by employees.

“AI is becoming more deeply embedded in business operations, and the risks are multiplying. The lessons from all these incidents are clear: unchecked deployment without robust security and oversight leads to real-world failures,” said Vareikis.

As AI continues to transform businesses, past incidents and potential threats show how crucial it is for security strategies to improve in parallel.